I’ve been sick the last week or so. It started creeping in at the end of last week and then took over the weekend and then I had to take Monday and Tuesday to recover. I’m still not really recovered tbh. I’m going to be straight with you; these are the first two days I’ve done nothing for a year or two. I tried three different video games and was disappointed by all of them. Last week I blogged a writeup of the talk I gave at Simon Fraser University. It’s back to normal this week with some things, and apologies that there’s a lot of AI in here.

Five Things

Be warned, this post is beset by ‘things I read that I now can’t find’ because they predate my use of rigorous bookmarking.

1. Science fiction is about strange rules, fantasy is about special people

Otherwise known as Chiang’s law. Venkatesh Rao summarises a notion that the great science fiction author, Ted Chiang, has been tilting at for a while: If the rules of society have been adjusted or changed, through the imposition of a new system, technology or exogenous factor then you’ve got yourself a science fiction story, often explored from the perspective of one or a handful of characters. If the story focusses on the unique and special properties of an individual then it’s probably a fantasy. This tracks with the idea that there’s relatively little exploration of the wider social context of fantasy: What are the macroeconomics of Rohan? What is the socio-technical regulatory regime of Oz? I read a thing ages ago which I just tried to find about how the whole of Middle Earth is roughly the size of Denmark (thing I can’t find 1) and basically nothing happens there. What is the industrial development strategy of King’s Landing? Who exactly is paying for The Wall?

Then there are tropes of course. Science fiction is associated with spaceships and lasers so something like Star Trek is science fiction in that sense but in narrative is a fantasy. Other than in DS9 no one ever talks about ‘domestic’ politics in Star Trek and since replicators can make anything out of thin air, there’s no economy to speak of. I remember reading (thing I can’t find 2) about the ‘holodeck paradox;’ if you can perfectly simulate any conceivable fantasy and live inside it, why do anything else? Presumably, outside of the handful of heroic characters, that’s what the vast majority of people are up to?

The opposite exception, as Rao points out, is Discworld which, despite being dressed up in the tropes of high-fantasy, is all about rules being thrown onto an absurd society and the implications explored. What if Death took a break? What if the bureaucracy of magic stopped working? Or, as I’m reading to my kid at the moment, what if a cynical theocracy actually encountered its God? I’ll always make a case for Pratchett as the great humanist and leveller of our age. There are no ‘good’ or ‘bad’ people in Pratchett, just flawed characters (all of them) who interact with the world and its changes in different ways. And despite the high-fantasy setting, there are no ‘magic pills’ or ‘superweapons’ and even when these MacGuffins do appear, they are also flawed and socially contingent in different ways. Consider the ‘gonne’ as the MacGuffin of Men at Arms which ends up as an allegorical device about corporate (guild) vs state (patrician) power and the regulation of potentially dangerous technologies as well as a satire of the supposed ‘technological neutrality’ assured by its inventor.

Anyway, Rao points out that, despite what you’d think, Silicon Valley operates more on fantasy myths: great (usually) men, uniquely chosen or suited to change history, are lauded and lavished with dollars to change the world, despite any understanding of society and systemic change shouting loudly that this is a Bad Idea. If the litany of charlatans and conpersons who have found their way to prison is anything to go by, this fantasy worldview is only becoming more entrenched.

Interestingly, I was listening to coverage of a survey that said more people in the UK put the slow pace of change down to failures in leaders rather than systemic blockers and social dynamics. So what? We need more science fiction and less fantasy. I wonder if people have tracked the political influence of Game of Thrones. I suggest we ramp up (good) TV adaptations of Pratchett ASAP, say by the time my daughter is 5? Ok so you can pair this up with Karl Schroeder’s 11 (5 if you won’t pay for them) tips for weaving science fiction into futures/foresight work as well. Don’t be a fantasist, be a fictionist.

2. The unspoken cheating epidemic

This piece in the WSJ plus this episode of Data Fix with (who I describe as) ‘cheating hunter’ Kane Murdoch are basically about the apparently massive epidemic of AI-enabled cheating in education. This is made easier at lower levels (e.g. pre-graduate degree) by firstly, the size of classes lowering one-on-one contact time where lack of understanding might be spotted and secondly, everything being online post-Covid. This has made it easier for students to harvest the things they need to run through an LLM to get answers. As Murdoch points out on the podcast, it creates a cycle because most curriculums are structured as stepping stones and once a student skips out on foundational knowledge they struggle to keep up and turn more and more to cheating to pass tests. Importantly, the podcast isn’t just about so-called AI but also about organisations, in Kenya and Pakistan particularly, where cheating is industrialised by human hand via WhatsApp.

This is obviously a lucrative area for startups keen to frame themselves as ‘AI research assistants’, and it has even driven the growth of LLMs that pride themselves on being undetectable. I recently marked a bunch of work (in a place which allows the use of LLMs as long as it’s declared) and would confidently support the stat reported that 20-40% of it was written (undeclared) by LLM but there’s little recourse without evidence.

Will this mean a turn away from ‘teach-to-the-test’? It’ll be hard to achieve given class sizes and the industrialisation of education. In design schools, there’s normally some form of marking by portfolio which makes simply churning out a few thousand words of text less significant in the weighting of an assessment, but how would this work for marketing or sports science?

3. Post-peak literacy

Connected to the above. Is it possible that the western world has quietly passed peak literacy? This December 2024 survey shows that low levels of literacy have increased (read: high literacy levels have decreased) since 2017, against expectations, as literacy is generally seen as a stable and growing benchmark. In other words, the shocks of Covid and so on are not enough to account for a general rise in illiteracy in much of the western world. The piece focuses on the US but shows data from many other countries with low literacy increasing by around 5-10%.

Sort of pithy to say but this could be attributable to an 88% decline in reading amongst adults in the last decade as they increasingly turned to screens. Throw LLMs as information providers into the mix and you can see the trend’s direction.

4. The past of an AGI future

Henry Farrell here writing on the still-pressing imaginary logic of inevitable AGI and how this logic is driving the DOGE hellscape in the US. Basically, you can excuse the destruction of social institutions on the rhetoric that ‘AGI is around the corner anyway’ and so first, these institutions are not needed in this AGI future and secondly, the information these institutions possess would be much more useful being chewed through a model (presumably one that Musk is happy to provide) to train a government LLM. Seems outlandish but so did everything happening today, last week.

Farrell emphasises that AI is a social and cultural technology rather than a substantial standalone gizmo or gadget, which augments and extends existing knowledge and understanding rather than up-ending or replacing it. So by deleting the institutions that manage social relations to replace them with AI, you’re actually replacing them with… nothing.

5. Beyond the frame

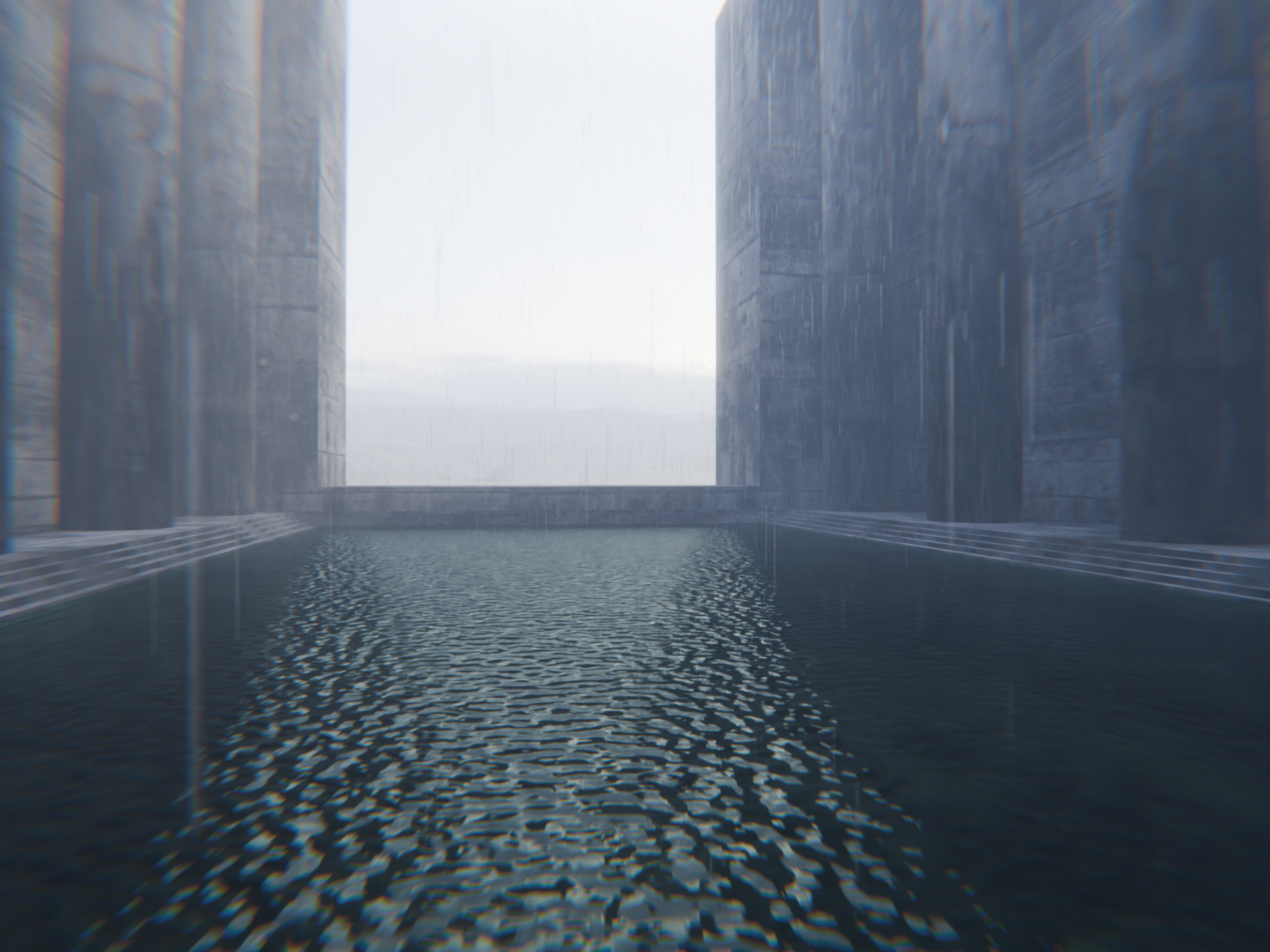

A few years ago, at the beginning of the generative AI boom, Rob Horning wrote about how generative AI implied the end of the frame; the expanded field. Effectively, that ‘framing’ would perhaps become redundant as images, texts and sounds were granted indefinite extensibility by AI. Horning talks about people running well-known classical artworks through generative AI to expand the frame but we can see TV series or music based on what you already like being endlessly extended in the same vein; a sort of reductio Shazam Effect. But as with all things there’s a counter; whether the parochial and absurd amusement of an endless conversation between Werner Herzog and Slavoj Zizek or, perhaps more interestingly, the emergence of generative infinite artworks like Hexagen.world; a generative world that expands as you scroll, built in a consistent visual vernacular. They thrive on the serendipity and surprise of detail and familiarity; visual cues and tropes that spark familiarity (I know that character, I recognise that thing) but in novel arrangements. In a way, it’s a most logical art form for generative AI since these qualities of familiarity, uncanniness, discoverability and so on are native to the technology.

What am I trying to say? Generative art is certainly not new, and has been breaking into the mass market through games, but generative AI might be opening the door to a world of mass-produced frameless art. At the moment the focus is on taking things that are already popular in film, TV and music and simply extending them to suck up more time and attention, while staying within frames of narrative, setting and screen. What new media forms might emerge once we are not only moving generatively through time but laterally as well?

Upcoming

- Tonight is Future Days #3 where I’ll be doing my best to speak to ideas of regenerative design and community which, I’ll be frank, I struggle with. I’ll actually be at Future Days this year, for some amount of time.

Short

- A bunch of big AI bigwigs say that there shouldn’t be a concerted AGI push because it will just kick off an arms race. Ok, I paraphrase.

- Why spend much less money on a real person when you could spend $20,000 a month for a PhD-level AI agent?

- 95% of the code coming out of startups is AI generated. Ok, I paraphrase a little.

- Alex Deschamps-Sonsino on International Women’s Day.

- Rachel Coldicutt on the Machine Ethics podcast

- Charlotte Kent moving beyond critique of AI and towards situating it in artistic practice. Or, I suppose, situating artistic practice around AI. She calls for slowness and ambiguity. Can’t say as I understood it all but there’s some great references.

- Such as this great story about tackling homelessness in Times Square.

- This company that has built a biocomputer for your desktop that Mona shared with me.

- Paco and Ca7riel have released a brilliant film satirising the (probably very real) anxieties and pressure of their runaway Tiny Desk success.

- This two-hour game costs $2k.

- Europe needs to build its own cloud.

- New anxiety-acceleration etiquette just dropped on Snap.

- BYD released a five-minute charging battery.

- Wikipedia in TikTok format might do a little thing about literacy rates, who knows.

Ok, toodle pip I love you. When does everything kick in? Naomi Klein must be banging her head on the wall so hard right now.