It’s dark and cold, which has the paradoxical effect of creating warmth in the things you know. Like a heat map where the hotspots are more concentrated because the space between is markedly more bleak. From the noise of heat, the shape of warmth, as it were.

It’s not complex

I’m in the midst of selling a flat and buying a house. Today or tomorrow, in fact, we might finally have the last bits of information we need to head into the final straight. We’re sort of on tenterhooks because we know all of the pieces we need, but they’re not coming in the right order or are coming frustratingly slowly. Anyway, people occasionally ask me whether moving house is stressful (or tell me that it is), and I’ve been finding this vexing because, quite simply, I’m not finding it stressful at all. It’s hard work and occasionally frustrating, yes, but it’s not actually stressful. Not in the way I understand stressful things, which are things that are both irresolvable and unignorable. With moving house, it’s quite easy to pick it up and put it down and not dwell on it. I don’t lose sleep or think myself in knots. And so I was thinking about why I’m finding what is apparently one of the most stressful things a person can do not stressful at all.

Well, here’s the thing. Because life demands a lot, my decisions are usually based on little aphorisms and algorithms I’ve collected to aid reasoning. Like, I have this thing about trying any food: if it’s an enjoyable food to someone, then it must fit somewhere along a bell curve of ‘nice.’ Now, it may be more or less nice, but if it has been eaten before and enjoyed, then it’s perfectly possible – more than likely given bell curves, in fact – that I’ll enjoy it. So far that has always worked and, as a result, I haven’t really ever encountered a food I don’t like*. What does this utter garbage mean for house moving, Revell, I hear you wail in agony? Well, people have moved house literally millions of times. So it’s perfectly possible to do, and in fact it’s so remarkably possible-to-do that whole entire jobs and institutions exist to make it happen. No one in this process is doing anything that has never been tried before. Therefore it fits along a curve of ‘doable’ and, relative to ‘holiday on the moon,’ sits profoundly near the top of that curve.

(This is where I’m going to lurch into gonzo systems thinking; forgive me, Mother Meadows.) Moving house is complicated, but it’s not complex. There are lots of moving parts, some of them conflicting, and that can be frustrating. But the process is entirely coherent and profoundly well understood, and furthermore it’s well known what that process looks like when it’s running well. Another example: a bicycle is a relatively straightforward but complicated thing. [Editor’s note: this is the second time I have edited out a motorist’s metaphor to undo the tyranny of combustion-centrism.] If something goes wrong with your bicycle, it can be frustrating to work out what’s wrong and repair it (unless, like me, you enjoy solving complicated problems), but it’s been done before, the component pieces and overall system are coherent, well known and understood, and you know what it looks like when it works well.

Now, restoring soil health is complex. Reducing car use in cities is complex. They are not coherent problems; they are shaped by huge numbers of externalities like weather, wealth inequality and class, tax regimes and technological investment. They are not well known and understood; there’s no one-to-one mapping of how to restore soil health or reduce car use, and both are markedly contextual, playing out differently in different places. So, while working bicycles look the same everywhere and need the same kinds of fixes wherever you are, the same can’t be said of cities or of grassland between South America and the Urals.

No problem

The other thing. (I’ve known about the complicated/complex divide for a while but never really thought about how it shapes the way I respond to different situations.) The other thing is that repairing bicycles and moving house are challenges. In a challenge (like ‘get to the top of that mountain’) you know what success is before you’ve solved it (being at the top of the mountain). In a problem (like ‘find the two prime divisors of 149,614,657’) you don’t know the solution or outcome until after you’ve solved it (?).

So, moving house and repairing a bicycle are challenges because they have known solutions: you’ve moved house, or you’ve repaired your bicycle. And both are complicated challenges because their component parts are coherent and well known. On the other hand, finding the prime divisors of a large number is a complicated problem, because the process of solving it is well known (iteratively try dividing by every prime in turn (even if it takes a loooooooooooooooooooooooooooooooooooooooooooooooooong time)) but you have no idea what the answer is until you find it.
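For the curious, here is a minimal sketch of that brute-force process in Python: trial division, dividing by 2 and then by each odd number up to the square root of whatever is left. It’s an illustration under my own assumptions (the function name `prime_factors` is made up for this example, and trial division is just the simplest method, not the fastest), but it shows the shape of a complicated problem nicely: every step is known in advance and you still don’t know the answer until it pops out.

```python
import math


def prime_factors(n: int) -> list[int]:
    """Return the prime factors of n (with repetition) by trial division.

    Hypothetical helper for illustration; assumes n >= 2.
    """
    factors = []
    candidate = 2
    # Only candidates up to sqrt(n) need testing; anything left above 1 is prime.
    while candidate * candidate <= n:
        # Divide out each factor as many times as it goes.
        while n % candidate == 0:
            factors.append(candidate)
            n //= candidate
        # Try 2 first, then only odd numbers.
        candidate += 1 if candidate == 2 else 2
    if n > 1:
        factors.append(n)
    return factors


factors = prime_factors(149_614_657)
# Sanity check: the factors multiply back to the original number.
assert math.prod(factors) == 149_614_657
print(factors)
```

For a number this size it finishes almost instantly, because the square root is only around 12,000; the ‘loooong time’ part only really bites when the numbers get much, much longer.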

| | Challenge: good outcome is known | Problem: good outcome is not known |
| --- | --- | --- |
| Complicated: known components, constraints and variables | Repairing a bicycle or moving house. Constraints and variables are all well known, and what a good outcome looks like is well known. | Prime divisors of large numbers. Constraints, variables and the process of solving are well known, but the outcome is not known at the beginning. |
| Complex: poorly understood components, constraints and variables | Reducing car use in cities. Constraints and variables are highly contextual, changing and poorly understood, but the outcome (lower car use) is well known. | Survival of life on Earth. Constraints and variables are riven with externalities and contestation, and there is uncertainty about the veracity of any solution. |

So we could draw up a map that looks a bit like this, based on how well we understand the pieces of the puzzle and how well we understand what a good outcome looks like. Ok wait, actually the puzzle thing is an even more useful metaphor. Hold on a second.

| | Challenge: good outcome is known | Problem: good outcome is not known |
| --- | --- | --- |
| Complicated: known components, constraints and variables | The puzzle has eight pieces and the box has a picture on it. | The puzzle has eight pieces but there’s no picture on the box. |
| Complex: poorly understood components, constraints and variables | Only some of the puzzle pieces are in the box. There is a note promising to return an unknown number of missing pieces, and another saying that the puzzle has not finished being made yet. The pieces that are in the box appear to be different sizes and may not in fact belong to the same puzzle. There are some loose bits of newspaper as well, but there’s a picture of the puzzle on the box. | You are given four large sheets of MDF and an old man shrugs at you and gruffly mutters, ‘We must make our own fun this Christmas.’ |

Yes. Something like that. So moving house is maybe unignorable in the sense that, if you’re committed to it, you can’t just hope it goes away; you have to do stuff. But all the stuff you have to do is resolvable because it’s a complicated challenge. So, you know, it will resolve with effort and time, and is therefore not stressful. Anyway, if this house move doesn’t happen soon, we must make our own fun this Christmas and it’ll be great anyway.

*There are exceptions. The famous fake vegan meat of 2013 was awful, and I struggle with aubergines because I don’t like cooking them, but I’m totally fine eating them.

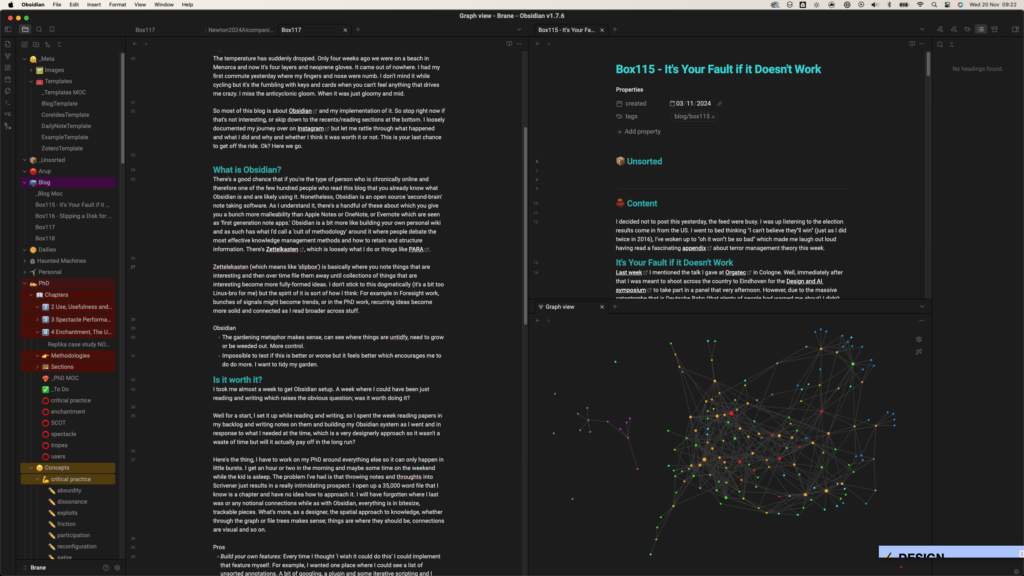

PhD

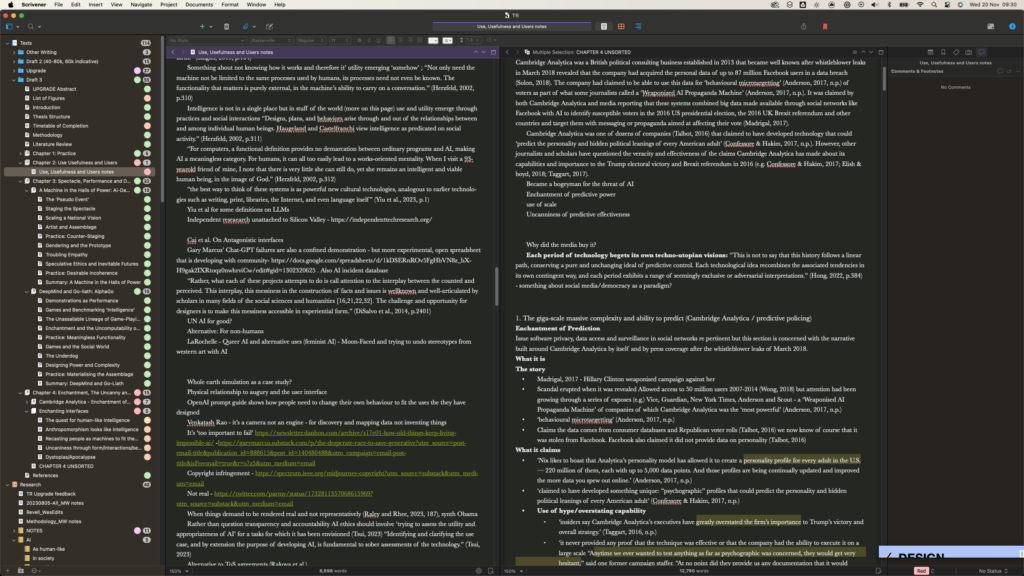

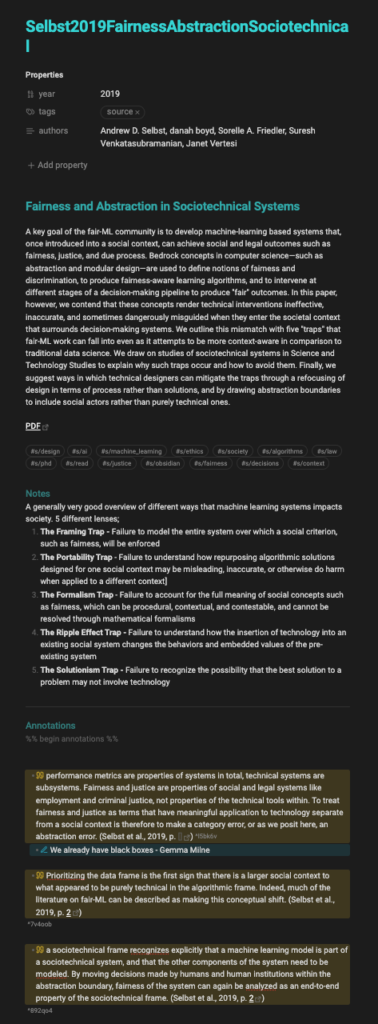

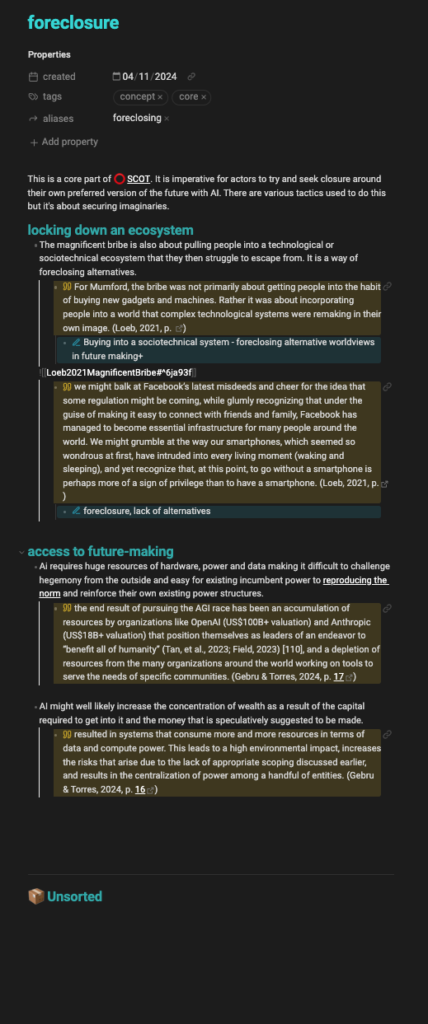

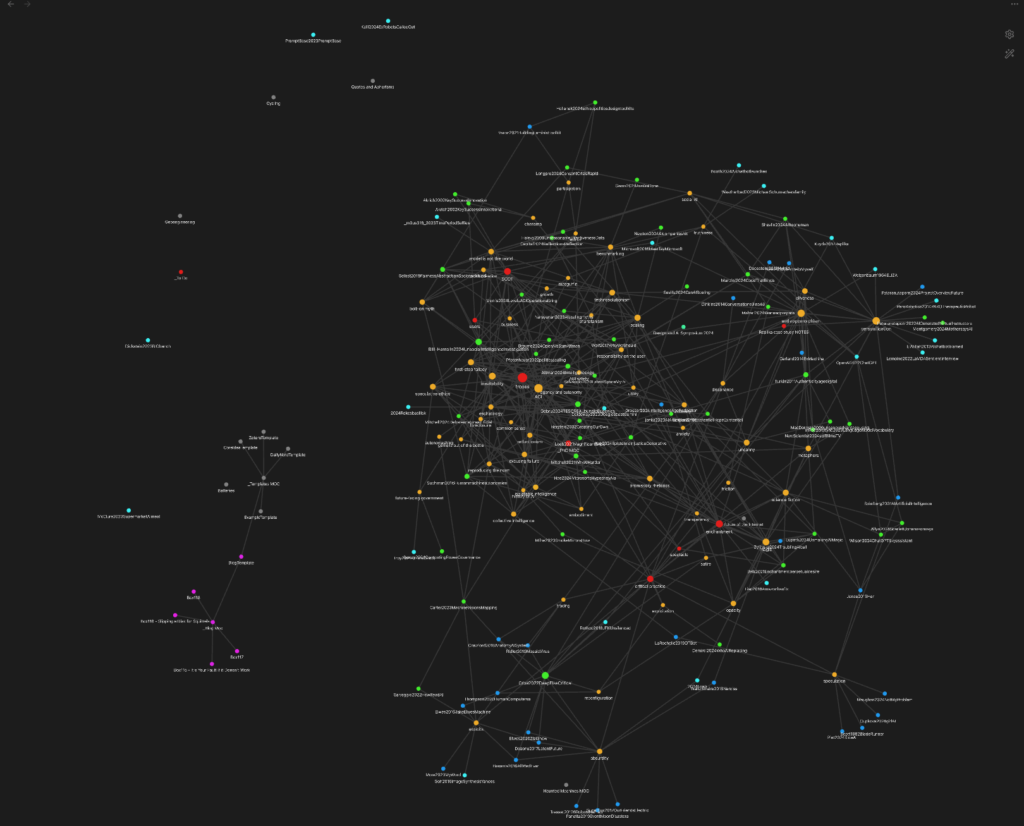

It’s really interesting and tricky at the moment (speaking of complex challenges). I’m trying to tease out the subtleties of different ideas. Sometimes I worry it’s wasting time, but it’s super engaging intellectually, and therefore I have to believe it’s contributing to the overall thesis. I’m increasingly finding and developing what I keep calling ‘concepts,’ which are grouped by type. So you have concepts that are myths, effects or flaws, concepts that underlie ideas of intelligence, futures and applications, and so on and so on. But this means getting deeper and deeper into super tricky and fun nuance. For example, I shared on Instagram that I had managed to tease apart ‘reducibility,’ ‘reductionism’ and ‘recasting as computable’ as three different concepts of the same idea: that everything is a technical problem.

In this example, ‘reducibility’ is the rhetorical insistence that social phenomena are reducible to computational components, and in my notes I’ve framed that as a rhetorical concept. ‘Reductionism’ is a ‘flaw’ of machine learning when it’s actually applied to real-world social situations, where continuous phenomena have to be captured as discrete and externalities are removed in order for machine learning to work (amongst many other things). Meanwhile, ‘recasting as computable’ is an imaginary effect in society, where the first two reinforce the commonly held belief that social problems are in fact technical problems. And that’s just three of about 50 different, interrelated and nuanced concepts! Throw in exemplification (myth), standardisation (flaw), normative reproduction (effect) and a few dozen other nuanced spins on what AI does to how we think about, engage in and imagine social problems! Phoo-eeee.

I wonder if this ever ends; is there a finite number of concepts? At the moment it generally works like this: a concept note gets bigger and bigger as I add content, quotes and references, until I think ‘this is actually three things’ and split it out (as with the reductionism example above, which was originally one concept). Anyway, since my binge a few weeks back I’ve committed to, and thus far stuck to, at least one hour a day, and words and ideas are flowing, so whether or not they make the cut, it feels like we’re moving forward and in the cut and thrust of it.

Recents

The chapter I wrote with Julian for Practices of Future-casting has been published. The text explores ways of making imagination serious in a business setting and the value it brings. We’re going to record a podcast about it at some point. There are other things, honestly, but I need to go to bed now.

Reading

Not many. As above, bed time. As above, thinking, writing, nuancing. Reading on pause.

- Another one I’ve re-read in my PhD work recently: From Rules to Examples by Schwerzmann and Campolo explores how the move from rules-based computation and calculation (things are such and such a way) to machine learning’s reliance on exemplars (things ought to be such and such a way) creates or reinforces normative orders for the world. Also, The Nooscope Manifested by Pasquinelli and Joler is another I’ve revisited recently, which was super formative in my PhD thinking.

- FOMO is Not a Strategy. Rachel Coldicutt’s sober analysis of the state of AI, getting ahead of the end-of-year hype cycles from management consultants and tech firms: “the absolute nonstop cavalcade of Big Tech hype means there is a lot to cut through just now. In particular, there is a barrel-load of shamelessly over-optimistic sales and marketing that is both bending economic policy.”

Ok, I have to go to bed. I write these things the night before so I have time to check them in the morning (yes, I miss typos, but this is a blog; it’s a work in progress with a 15-year record and little to show for it). And obviously I imagine you picked up the sub-sub-gag in this week’s title. But just in case. Ok, I love you and speak soon.