I really enjoyed Gary Marcus on the Ezra Klein podcast. Marcus is a significant figure in AI having launched quite a few companies but is also a good, measured cynic. I found myself nodding along because his over-arching thesis is exactly right (to me, at least); AI is great, could do amazing things, but we keep going for low-hanging fruit and wow-effect rather than celebrating and focussing that are actually going to deal with the massive problems we have. Eg. Why has ChatGPT got so much bang while protein folding is basically received with a whimper?

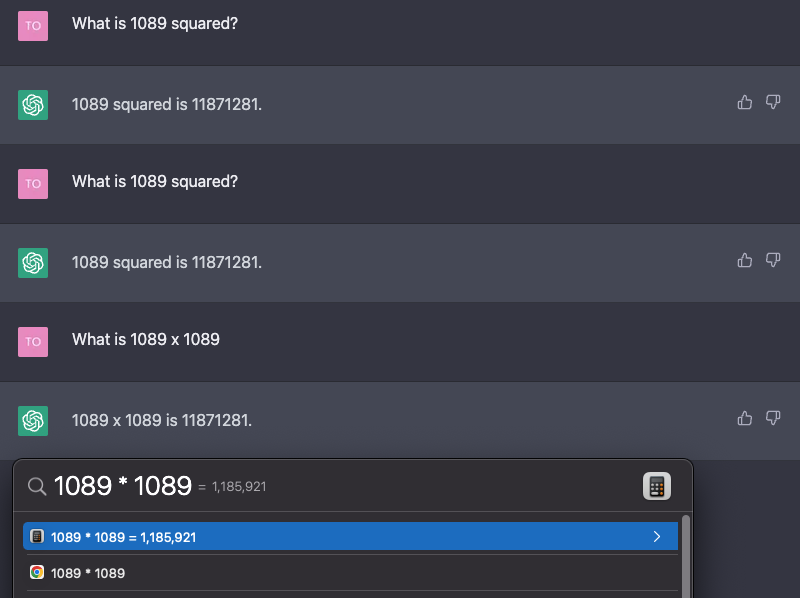

He also made a really compelling historical point about the prevalence of neural nets: They’re very good at probabilistic reasoning and seeing patterns, but in most instances where we interact with computers we want symbolic reasoning. We want 2+2 to always be 4, not 2+2 to most likely be 4. Neural nets can be super useful for things like speech recognition where most-likeliness is a useful heuristic. Bit in the case of, for instance, driving a car (MWSTLOT*) down a highway, you don’t want ‘most likely’; you want thing-equals-thing every time. Marcus makes the point that most of the systems that we have come to rely on in computation such as databases, word processing, spreadsheets, emails etc. are reliable because they’re symbolic; you get the same thing every time you do a massive bit of data crunching in Excel because the numbers always mean the same thing.

*May We See The Last of Them

A speculation on the next few years of AI vapourware.

Microsoft laid off 10,000 employees last week in parallel with a rumour that it was upping its investment in not-at-all-open OpenAI to $10bn. Commentators have noted how the emergence of Large Language Models might be spooking search engines because suddenly ‘How long should I cook sausages for?‘ might just give you a straight-up answer rather than linking to a lengthy blog with loads of embedded ads or that the first few pages of Google might just become SEO-optimised generated crap and undermine trust and usability. Google has BERT and LaMDA – two of the biggest and thus (by the prevailing rubric of the Unreasonable Effectiveness of Data) – most ‘effective’ LLMs but won’t release them ‘to the wild’ because the risk to reputation is so great and because, in the post-Snowden, post-Cambridge Analytica years, the big tech companies take risky bets with data and new technology seriously.

The sudden threat of ‘upstart’ companies like not-at-all-open OpenAI ‘disrupting’ the GAFAM Empire has sent them into overdrive mode ‘ …and shaken Google out of its routine. Mr. Pichai declared a “code red,” upending existing plans and jump-starting A.I. development. Google now intends to unveil more than 20 new products and demonstrate a version of its search engine with chatbot features this year…’ The language implies Alphabet being reactive and war-like saying that it ‘…could deploy its arsenal of A.I. to stay competitive.’

This massive turning of the ship comes amidst a post-Covid definitely-is-a-recession where the big tech giants are laying off staff and buckling down for a slower market. Google’s parent – Alphabet – laid-off 12,000 people (roughly 6% of workers) from senior leaders to new starters last week with more coming for non-US employees.

So, we have a landscape where, because of global conditions, there’s a shrinking market for tech products, web services and advertising which is the revenue that underpins GAFAM. At the same time we have new, smaller AI companies moving at remarkable speed but with untested and unregulated advances in LLMs, diffusion and transformers. Unlike the previous boom around GANs, these new advances are much more ‘realistic’ and usable and so have had enormous public impact and significantly raised expectations amongst consumers. But, as we know, these new companies don’t (or no longer) have the same guardrails or hesitancy about putting untested and unregulated products in the public domain to build hype.

So, my speculation: In response to the new threats and shrinking economy, big tech are forced to compete by lowering the guard rails and releasing more of their unfinished, unpolished and untested products into the wild to grab attention in a dwindling market place (we’ve already seen this with Google’s announcement of 20 new product is in 2023). The imperative becomes ‘speed of release,’ not ‘quality of product’ and we end up in a race-to-the-bottom of badly designed, ill-conceived and rushed AI that could not only cause social harm but also damage the actual science by distracting resource and attention from meaningful and useful AI projects. We end up with years of AI vapourware and overblown hype waves.

The ideal scenario would be governments developing clear-eyed mission-oriented AI research strategies and agendas aimed at tackling the climate crisis. A sustainable vision for investment that provides a long-term framework for the tech industry to regather and rebuild around meaningful applications of AI, carefully released and tested with good policy frameworks for support. You get strategically well-considered products and services that actually improve quality of life and start to actually positively the things people care about rather than distract for them. Or, as Gary Marcus put it, a CERN for AI.

What are the chances?

Another prediction: Node-based AI

Part of the Google thing is the development of second-order tools: In other words, tools that help people who aren’t computer scientists make their own AI, like how Photoshop allowed people who aren’t computer scientists to do computer graphics. There’s a couple out there swimming about or there are plugins for eg. Unity that connect an API up. But the real thing is going to be the first node-based AI software that allows you to home-brew AI really easily. Of the ‘I need an AI that can do this specific function real quick.’ Like single-task, issue-based interactions.

To think how easy the kids are going to have it! I had to painfully trawl through ml4a to get shonky S2S neural nets to play nice!

PhD

Poured a good 45/50 hours into that bucket last week and all I got was a measly, disjointed argument. Little bit deflating but the flow was enjoyable. I’ll give you a bigger slice of that particular pie once I’m happier with it. Back to eaking out the small hours for a little bit.

Short Stuff

- How far could you travel by different means of transport with a limited carbon budget?

- Tobias Rees tweeted about his kids using ChatGPT and yes, it brought me cheer. I am not doomy on the tech itself, just on the hysteria around the tech. There is something amazing about a tool that opens up imaginative space for folks.

I need to turn my mental ship around myself. I get so angry about bad AI hysteria and I need to move through that stage into doing something about it. No-one’s going to read my PhD after all and really the only thing that matters is 1.5 degrees so how can I bring the two together? I’m in good spirits generally at the moment so need to use that as a lever to get over this hump. Love you.