Grim old news at Revell™️ HQ: I had a pretty bad cycling accident in August which meant some metalwork and a complex operation in my leg, followed by a couple of months of physio. I haven’t mentioned it hugely here; it’s more an Instagram thing. Anyway, over the last two weeks I’ve been in a lot of pain with really reduced mobility after generally doing well, and it turns out that’s because it’s all gone wrong: the metal’s broken and come loose. It means I have to start again; they have to replace the whole thing and I have to go back to square one.

Physically, it’s ok and I can cope; I have a high pain threshold (I think; it’s one of those subjective phenomenological things I’m always fascinated by) and can generally adapt, but it’s pretty spiritually devastating. I hated my time on the ward. It’s full of men in pain who express that pain through anger, and spending ten days there with three or four of them winding each other up, getting more and more aggressive towards the staff, was horrible. It also means I’m further away from returning to cycling, which really is all I would ever do if there was nothing else I had to do. Since getting out of hospital in September, I’ve just been deliriously clinging to the feeling of being back out on the road, zooming up and down lanes, and now that’s been pushed even further away.

So this week I’m looking at going in and out a lot while they run tests to figure out what went wrong and decide how to fix it, which will, within a week, be some form of urgent surgery. But, moving on to ranting about AI:

Generation No Thank You

You know, for all my reading and writing about AI, imagination, creativity and so on, I have never ever used an image or text generator, nor have I ever tried to, nor do I really have any interest beyond the morbid fascination I take in watching others gleefully post images and ‘conversations.’ (This is a recurring pattern; I’ve noticed I’m less interested in playing video games than in watching others play.) It’s something I’ve been trying to understand about myself, because ‘playing around’ is such an important part of how I think about my practice, and the ludic, critical designer bit of me is saying ‘you keep going around telling people to get their hands dirty to understand things better, so what are you doing?’

There’s something inherently undesignerly, unrigorous, unthoughtful about AI text and image generators which makes me bristle. When I built my own really crappy models and training data sets, back in the scrappy old days of ml4a and GANs, there was a feeling of frustrated exploration; ML was still reasonably hard in 2016/17, especially for a dumb-dumb designer. You had to learn all the terms and logics and processes, get your head around a really difficult set of concepts about epochs and dimensionality and so on, and – in a critical designerly way – see how the choices you made affected the outcome. But these text-to-whatever systems don’t let you get to that feel of play; the model is already trained, so you’re just spaffing words into a box and sort of giggling like an idiot at whatever comes out. That’s just the tendency of interfaces to alienate you from the mechanics of what’s happening. I get that, but I don’t know what I can learn from fuelling these things.*

This week (maybe last) saw the release of ChatGPT – OpenAI’s not-at-all-open new large language model. New generators are coming so thick and fast now that the hype is starting to die down a little, but there’s still a feeling of giddy excitement at the novelty whenever a new one is released, and people eagerly share the features they’ve discovered like we did with smartphones in the early 2010s. Rob Horning (of Real Life, RIP🪦) wrote one of the better pieces on creativity in AI generators following its release:

…the fact that AI generators don’t care about what they produce is integral to their appeal. It makes consumption the locus of creativity, because it is produced by agents without intention. Whatever meaning one perceives in it can be felt as authoritative.

One could try to re-objectify generated content by trying to understand it in terms of the statistical weights and measures the model develops, or the biases of the data sets used to train them. But such calculations are fully unimaginative, occurring at a scale that is unimaginable. They are performed without any leaps of intuition that would open them to interpretation. So all the imagination can only be added after the fact and serves to elucidate not how the content was made but how ingenious the consumer is in responding to it in some way, picking it out from amid all the other generated material for special attention.

That helps explain why AI-generated content is readymade for social media posts. It allows people to show off that creative consumption, how clever they can be in prompting a model. It is as though you could have a band simply by listing your influences.

Horning really hits on something here. I’ve underlined some bits which leapt out at me: ‘Whatever meaning one perceives in it can be felt as authoritative.’ In other words, the system has no authorship, no intentionality, no design, so whatever you choose to read into it – however you (as the viewer/reader) choose to present it – is right; there’s no prior context, theory or idea. This means people can make up all sorts of utter shit to justify their interpretations, such as using GPT to generate an interview with an artefact from Midjourney, or colonising the aesthetics of indigenous cultures. The lack of actual creative impetus in the generator means there’s no-one to argue with, literally or figuratively, and no recourse to a dialogue about what the artefact means – it’s a hollow shell and it means whatever you say it means. This gives users an enormous sense of cultural power and very much taps into the ‘magical’ tendency of tech: these images or chunks of text are golems, fully crafted and totally at your command but devoid of any meaning or intention.

(This is very different from good AI art, where the ML is used as a critical tool and there is very much the authority and voice of the artist driving the viewer to think about or engage with some particular idea, usually to do with a critical issue of the technology. And importantly, that voice and intention have developed from learning and experimenting with the AI.)

Because of this lack of actual authorship, all the meaning, imagination and so on is projected onto it ‘after the fact’, as Horning says, which leads to his point that it ‘…allows people to show off that creative consumption.’ The value in creating and sharing AI images and text is to use them to smugly show off how smart or insightful you are at gaming the system. As Eryk Salvaggio has said, the process of text and image generation is more akin to playing a game than to actual creativity, constrained as the models are by their structure and training data, so that they cosplay novelty as creativity. Or, in Horning’s words: ‘It is as though you could have a band simply by listing your influences.’ By using interesting images to list how novel and exceptional you are at using these systems, you roleplay at being creative without actually having created anything. It’s all so kooky and weird; it’s like having a golem and calling it your best friend because it does what you want.

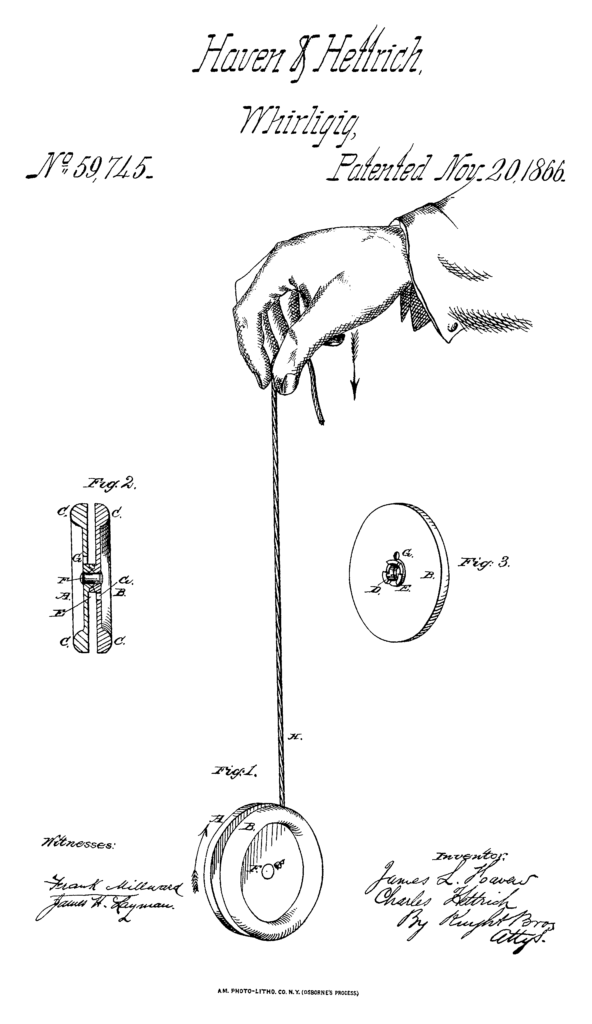

When I was a kid, there was a brief spell where yo-yos were really cool for maybe half a year. There were all sorts of different ones with gyros and features and lights and colours and sounds and limited editions, and everyone spent their school breaks learning new tricks that they saw in books or magazines. No one actually invented a new yo-yo, took one apart to understand how it worked, or attempted to create a new device, toy, function or form of game. That’s sort of what’s going on now, except the yo-yos are the generators and the tricks are social network posts. Nothing’s actually being created; it’s just social jostling. Meanwhile, the adults are getting on with protein folding.

So there’s an un-serious frivolity to generators which makes me doubt their value as creative or critical tools. But this doesn’t really get at why I’m wary of getting my hands dirty with them – after all, I’m all about un-serious frivolity. I’m critically conscious enough not to be dazzled and drawn in by the spectacle, so I don’t think it’s a concern about intellectual integrity. I think part of it is wishing to maintain some ‘ethnographic’** distance – to watch the different approaches and discourses emerge and develop a sense of the field before identifying the best place to intervene. Part of it is probably just snobbery and my general aversion to hopping on hype-trains.*** Who knows. I guess that’s why we’re all on this thrilling journey together. Yawn.

*I will caveat this by saying that it is kind of cool and fun how every time a new generator is released everyone piles on to try it out, and there is something about the perspicacity and playfulness this reveals in the ‘community’ that is quite joyful (see Short Stuff), but only a few people, I think, are getting that balance of srs intellektual enquiry + messing about right. Most of it is people loudly remarking on their own genius for putting some words in a box. But magic, after all, is fun and thrilling and exciting, and I won’t take that away.

**LOL

***I think the underlying thing to remember about any AI presented to you, no matter how remarkable it appears to be, is: ‘this is just the best current form of statistical analysis available to me.’ Then proceed to play with it. Don’t go in with expectations of magic or spectacle; start with the assumption that it is just a very fancy Excel spreadsheet and read it through those eyes.

Recents

I wrote a chapter with Ben Stopher about the future of design schools that has now been published. We explored four speculative scenarios of what might happen to the design school based on near-future trends: we looked at deepening and increasing automation and data-driven learning; more marketisation and centralisation with a focus on employability and profitability; decentralisation and atomisation into temporary and de-institutionalised settings; and finally the sort of erasure of the design school altogether. It was fun to write (and surprisingly quick); check it out.

Short Stuff

I’m still mulling over my feelings about preferable futures and the anti-dystopia movement of recent times. I totally get it and fully agree about the value of stories of hope, especially as many of them have grown out of critical and protest movements around the world. But something still worries me about being willfully blind to how bad things could get. I don’t know. I’ve parked it for next week (if I’m able to blog next week). Alright, short things:

- J’adore ‘Text to Everything’ as described by this mega-thread. A+ mic-dropping.

- Scott Galloway’s very good, sober and balanced analysis of the state of AI, with some barbs at the crypto grifters.

- A piece in the American Affairs Journal about the current tech bubble and its fundamental uselessness when it inevitably collapses, as well as the research context that got us to a place where the separation of academia and industry means that industry produces crap products (my words but see below) and academia produces crap papers.

- In amongst the (ok, somewhat justified) hype around ChatGPT, Facebook (what Meta actually is) released Galactica – a language-model-based search engine, which was a terrible idea. It only lasted three days, conflating language with knowledge and driving another nail into the coffin of social networks. You have to ask why apparently massive, experienced tech companies are releasing unfettered shit into the world. Oh, yeah.

- Former colleague (and design hero, tbf) Ramia Mazé interviewed here on design for policy making.

- Goes a bit to Horning’s point that AI generators just serve the purpose of showing how cool and connected you are: someone built a machine inside ChatGPT for no reason at all.

I’m not particularly happy with this post. There are too many loose strands of ideas and open-ended provocations. But this is a blog, not a journal, so I’m under no obligation to be coherent or well-evidenced. I’m trying to figure out why I feel and think things about things. (Not, like, as a whole, specific things.)

I don’t know about you (you rarely write back), but there’s something on the wind, isn’t there? Something about the flavour of social politics feels different. Not hopeful or exciting, but not despairing either, as it has been for so long. Just different. I don’t know. Another thing to think about. Loveyoubye.