I decided not to post this yesterday; the feeds were busy. I was up listening to the election results come in from the US. I went to bed thinking “I can’t believe they’ll win” (just as I did twice in 2016), and I’ve woken up to “oh it won’t be so bad”, which made me laugh out loud, having read a fascinating appendix about terror management theory this week.

It’s Your Fault if it Doesn’t Work

Last week I mentioned the talk I gave at Orgatec in Cologne. Well, immediately after that I was meant to shoot across the country to Eindhoven for the wonderful Design and AI symposium to take part in a panel that very afternoon. However, due to the massive catastrophe that is Deutsche Bahn (that plenty of people had warned me about) I didn’t make it. I thought, given I had 6 hours in which to make a 2 hour journey, that even with the delays and problems, I’d get there.

Anyway, I didn’t but I made it for the morning keynote the next day: It’s Still Magic Even if You Know How It’s Done. Now, where I’d spent two weeks dragging the previous day’s talk up from my brain and out from render farms, this one I just sort of threw together the day before. It was broadly a meander through the bulk of my PhD work so in that sense, it wasn’t that difficult and I was able to draw on a lot of the material I already had.

Of course the title borrows from one of the two ways Terry Pratchett has put down the sentiment that magic is knowable but is still magic. In Pratchett’s case he is making a case for magic (which I wholly support) while I was, in the context of the theme of ‘enchantment’, talking about how we can both know that AI is a technical, computational thing while also being convinced to feel that it’s magic, usually to try and sell us impossible dreams. You’ve heard all this stuff before. The talk went really well. I hope they recorded it. If not, it’s probably worth writing up here at some point although I probably won’t be able to capture that old Revell-is-winging-it charm.

But what I wanted to write to you about is this: later that day I experienced both a sense of vindication and slight guilt. Someone from a Very Large Technology Company was on stage later in the day to deliver a presentation as part of a session on ‘Industry Perspectives’ and was in the unfortunate (and I think, knowing) position of having to repeat all the tropes, narrative tricks, rhetorical and metaphorical sleights-of-hand I’d just been critiquing not an hour or so before. Given this, paired with the odd Freudian slip (the phrase ‘replace journalists’ was very briefly left hanging in the air before a rapid backtrack to ‘augment journalists’) and the use of big numbers and graphs showing things like ‘scale’ and ‘growth’ (again, which I’d talked about being used to sell speculative fantasies), I can only imagine this was a difficult talk to have to give in the context of what I’d just walked the audience through.

It’s Because You’re Not Sufficiently Courageous and Curious

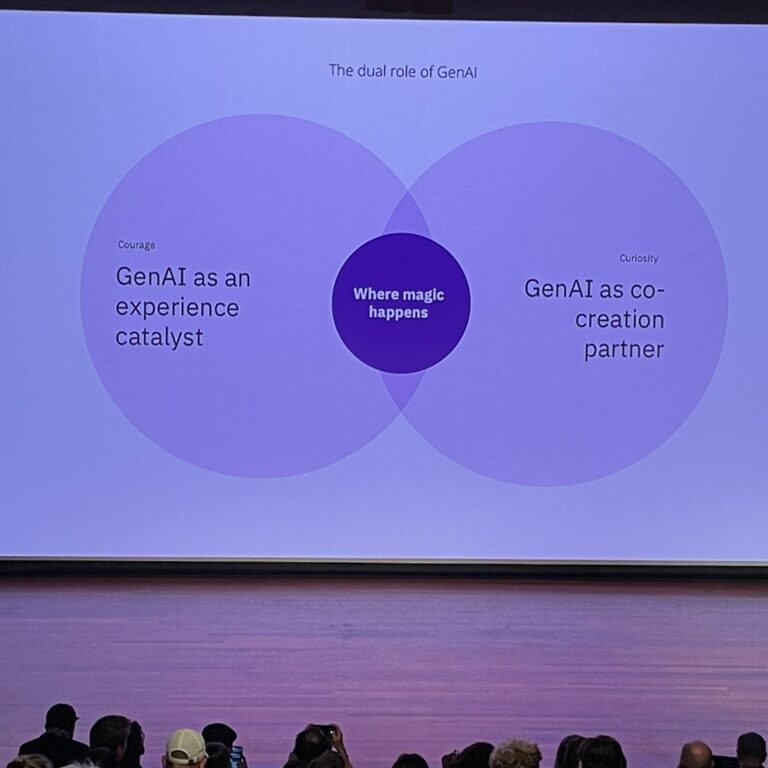

The narrative conceit of the talk was how ‘courage’ and ‘curiosity’ ‘make the magic happen’ in AI. There’s a lot to take in there. The obvious part is the reliance on the word ‘magic’ to explain the value of this thing. And again, I’d just stood on stage and dissected how magic is used to over-inflate the efficacy of technology as well as make its operation and construction opaque. But the part that was new to me was the constant circling back to the need for us – the potential user or client – to be courageous and curious in order for the AI to work. I’m paraphrasing but the gist of it was ‘your courage and curiosity plus our technology.’

The preface to this was ‘generative AI is new and confusing and difficult’ but you can make money off it if you are sufficiently courageous and curious. In other words: it’s not up to us to tell you what this thing is for or how it makes your business/service/product better. If you can’t see that, it’s because of your own failings, and I think it was the first time I’d seen it put so plainly.

There’s obviously a huge amount out there at the moment about the lack of tangible utility or ROI of generative AI for real contexts, whether at work or home. There are some great examples of isolated tasks, and my working theory is that there’s much more benefit to people who are information rich and time poor (like freelancers or small businesses), but as a general, paradigmatic shift in both work and economy, it’s not particularly weighty. The implication of the logic from the Very Large Technology Company is that that’s your problem. That if you’re not using generative AI to great effect it’s because you’re not taking enough risk, you’re not thinking big enough. These rhetorical tricks don’t feel that unusual (think of Diesel’s ‘Only The Brave’, which also implies that it’s up to you as the consumer to live up to the expectation of the brand). But that’s about a cultural identity or fashion item (or perfume actually) – it’s an aesthetic choice.

I wasn’t there (I was doing other things like being silly and having fun) but I imagine there were people from this Very Large Technology Company going around the world in the early 2000s and saying to people at conferences ‘You know how much you spend on business travel? You can spend less on video conferencing and it’s quicker and more people can do it so it’s better.’ And people in those audiences probably looked at a number which looked better and went ‘yeah ok.’

I doubt (but again, I was being silly so can’t testify to it) that they walked on stage and said ‘are you going to pay for video conferencing or are you an intellectually stunted, snivelling coward?’ Because videoconferencing is self-evidently cheaper and more effective than expensive flights for international communication. But, because generative AI isn’t self-evidently useful in any significant way other than for novelty and performative futurity, you have no option but to use a handful of slightly ethically dubious, highly experimental and probably carefully edited examples, wave the made-up big numbers from widely discredited consultant reports around, flash an s-curve and imply that anyone who doesn’t stump up cash is a craven luddite.

PhD

The above is a new one that has been added to my ‘AI myths’ list. I think other people have been making these lists of AI myths too but this week I’ve been bingeing on PhD to try and get over a bit of a hump and get the gears going again. This has largely also been about transitioning my brain into Obsidian and doing things like, yes, ordering and compiling lists of rhetorical tricks used in AI.

I’m not going to deep dive into what I’ve been doing (I did but I deleted it); I’ll save that for another week. But it’s been an interesting process of doing something I’ve wanted to do for years in setting up Obsidian and letting it emerge through an intense but organised process of 5 or 6 15-hour days with my head fully inside my PhD. I’m also writing this post in Obsidian right now. I’m using tags with anything I put on the blog so I can also link that into my ‘second brain’ (God, as I said to Maya, I sound like a Linux bro saying these things). Look I’ll post a screenshot.

That’s what I’ve been staring at for days now. I haven’t got anywhere near as much done as I wanted but I haven’t wasted a minute either so you know, I don’t feel bad about it.

Reading

I don’t post everything I read here by the way. Just things that are interesting or useful or feature friends.

- Beth Singler’s book Religion and Artificial Intelligence is out. I haven’t read it yet but I’m going to.

- After software eats the world, what comes out the other end? asks Henry Farrell. Nothing hugely surprising here about everything being centripetally pulled towards a cultural medium by LLMs but some good links.

- Sean Johnston’s The Technological Fix traces the origins and impacts of the post-war idea that technology could and would fix all social problems. Unsurprisingly perhaps, the perspective is rooted in nuclear science; by creating weapons that would eliminate the need for further conflict and a power source that would mitigate scarcity, the men of the time believed they would end the root causes of social ills through ‘applied science.’

- Casey Newton’s pretty thorough examination at the start of Google’s anti-trust showdown.

- Melting ice is potentially slowing the rotation of the Earth.

- Tanager-1 spots its first methane plume out of a landfill in Pakistan. You go little guy.

- I’m Running Out of Ways To Explain How Bad This Is. When your distrust of political institutions and belief in your own worldview outweighs the admitted fact that information you are creating, sharing and disseminating is made up or false.

- Apparently The Whisperverse is the future of mobile computing with augmented reality plus AI inside your head. I sort of like this idea; I could see it being charming and enjoyable and helpful, but it all relies on magical terminology as usual.

- Amongst my reading, Consent in Crisis was long but interesting. Basically: websites are increasingly guarded against AI scrapers through their robots.txt. However, this means the bulk of scraping is less and less diverse as the ‘long tail’ of the web fails to be scraped into models. The authors show, for instance, that news sites make up about 40% of tokens in C4 (the Common Crawl dataset they examined) but only 1% of ChatGPT queries. Meanwhile, the most popular uses are creative composition, which makes up almost 30% of ChatGPT requests, and sexual content, which makes up almost 15%. The authors argue that this means a misalignment with heavily biased outcomes.

Listening (and watching)

I don’t watch much TV and (this is going to sound outrageously pretentious) I feel better because of it. I don’t get dragged into staying up past when I want to, I don’t feel like hours just vanish into nothing. But I’ve been watching Industry while on the turbo trainer and there are two major problems: First, the finance babble makes no sense but there’s enough tense eye contact or knowing nods to get a read on whether what one character finance-babbles is good news or bad news. The second thing is I can’t tell the difference between the foppish private school boys. I’m on like episode 5 or 6 and Rob, Ross, Greg, Steve, Simon are all interchangeable to me. Because the writing jumps quite a lot as well, just right over situations or events, they could conceivably all be the same foppish private school boy and I just missed that subtle editing cue. But in listening:

Courtney LaPlante joined by Tatiana Shmayluk to duet Circle with Me is the birthday present I wanted, thank you and I love you. Speak to you next week.