I’ve been very much a ‘fairweather’ cyclist this winter. I failed to pick up my winter mojo so whereas last year I was giddily splashing through puddles in the pursuit of the Festive 500, some personal life changes and strange adversity to getting muddy means I’m spending this winter an hour or two on the trainer per day. This isn’t as dispiriting as it might be but perhaps I’ll get it together next year.

DS059

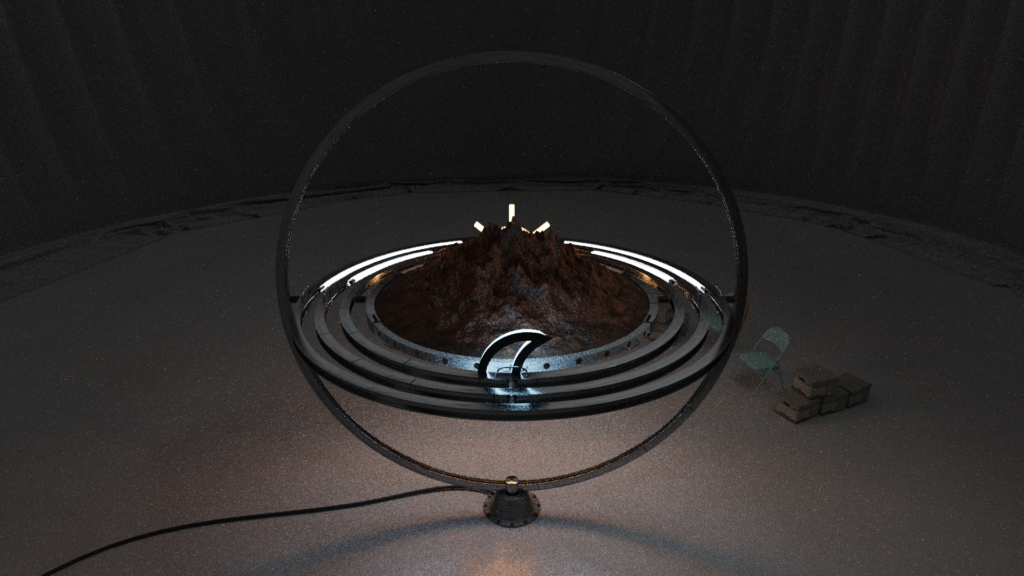

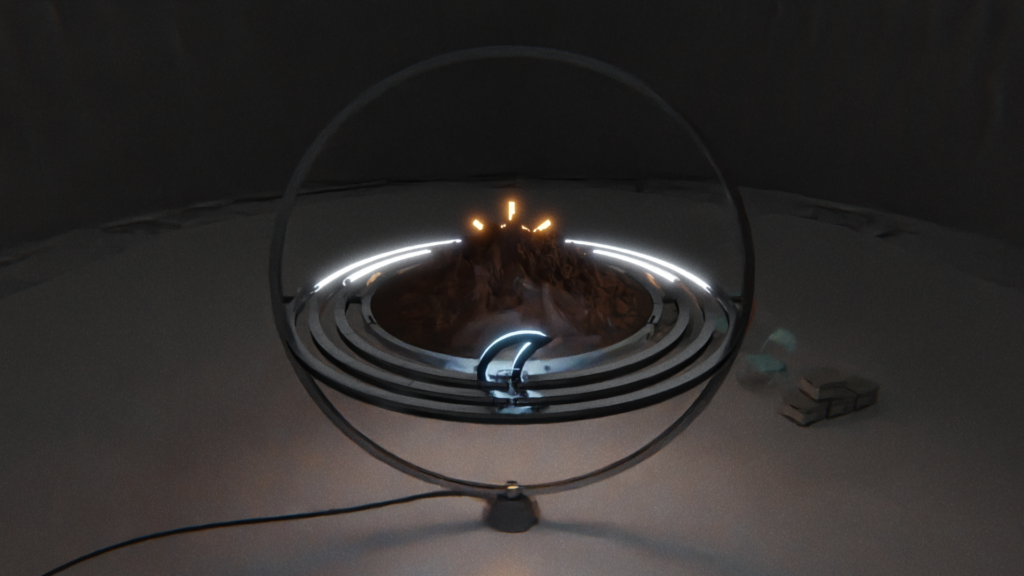

I started this week messing around with some procedural terrain generation using displacement textures. Once I had, that building the gyroscope was actually quite simple. Each ring simply rotates 180 degrees in four seconds around the appropriate axis, each one parented to the last so it inherits the rotation of the ring it’s ‘inside’ as well. It has to be rendered in Cycles because of the moving light sources so I spent most of the time messing around with the de-noising functions in Cycles.

This is a 400 frame animation, each frame has 128 samples to keep rendering relatively brief (still took about 8 hours). Because of the complex lighting and shiny surfaces, the outputs come with a lot of noise and ‘fireflies.’ Fireflies are overly-bright pixels created by the algorithm going a bit nuts trying to work out where the light goes. Luckily Blender has these great de-noising functions now which can really smooth out these artefacts, but the tradeoff is often that it can be overly-zealous and some of the detail can be lost. Or it can even ‘invent’ noisy detail which can be its own sort of fun.

I really can’t tell you how great these de-noisers are, to be honest. If you don’t do rendering you don’t know the hours and hours of misery that used to be poured into tweaking lighting settings and running and re-running renders at higher and higher quality to get something that didn’t have artefacts all over the place.

Machine-Illegible Literature

I finally got around to reading the Unreasonable Effectiveness of Data. This paper is considered a significant contribution in the field of machine learning and the first part details the process of using ngrams to teach computers language. The super short version of the method is that it choses the most likely following words from what went before based on increasingly huge corpuses of human writing.

There’s quite a number of books and chapters written with/by so-called AI now out there using this and similar methods. For example, Kenric McDowell has been working on various things for a number of years but it’s pretty commonplace now to punch a couple of prompts into GPT-3, get some words back and call it ‘a art.’ These projects serve as interesting insights into the state of the technology and the potential for creative collaboration even if sometimes the rationale is illegible without a good dose cutting-edge theory. However, one thing I haven’t seen and would probably be more interesting is the least-machine-readable book or chapter.

What if a time-rich artist or writer sat-down with GPT-3 or some ngram algorithm and reverse-engineered it? Instead of progressing through the most-likely series of next words, similar to Erica Scourti’s amazing work with autocomplete, this project would mean choosing the least-likely next words. This would take an enormous amount of human authorship and labour. The exact aim would be to automate as little as possible in order to avoid the influence of algorithms that predict what should follow from any word or combination of words. Even spelling and grammar checks are based on rudimentarily probabilistic models that would have to be avoided. The author of such a work would effectively be manually mining ngrams for the least likely combination of words that maintain some meaning, no matter how vague.

The notion of ‘meaning’ is really important. Works like CV Dazzle, machine learning attacks, machine vision hacking or other pattern-breaking systems make visuals machine-unreadable while still retaining some meaning to humans; either as beautiful image or pattens, statement pieces or protest imagery. In fact these and many like them are useful precedents of how critical images expose the technical workings of machine learning. But the book couldn’t simply be a jumble of misspelled words approached in a formulaic way in order to appease the criteria of ‘least-likely’ – it would have to be the least-likely combination or words that still retains some meaning, even if that is purely aesthetic. This is somewhat an updated Oulipian approach – treading the balance between computational, formulaic and meaningful in literature.

This process of manually mining for least-likely sentences is somewhat reminiscent of one of my favourite pieces by Femke Herregraven – Subsecond Flocks – in which the thousands and thousands of financial transactions happening in a few milliseconds have been hand-cut into acrylic. There’s something really interesting in the practice of critiquing automation through apparently menial labour: That by intentionally pursuing hard, human tasks, you can show the work that is done. Tom Thwaites’ Toaster Project is an obvious example of this in hand-building a toaster from scratch. This isn’t a form of practice new to critiques of automation or industrial processes though. Alfred Gell writes about how much of the enchantment of great art is in the viewer imagining (or struggling to imagine) the mode of production. He suggests that it’s in this gap between the outcome and the perceivable work that viewers of art (particularly religious art) through history have imagined the hand of god/s or the supernatural.

The books that have been coming out from art publishers written with/for/by so-called AI have their own sort of charming aesthetic gibberish. A gibberish that is interesting and somewhat fetishised in the same way that Deep Dream images were when they first broke onto the scene. They have their own particular weird which can’t be denied and fascinates artists. I imagine the awkward dreaming metaphor also had a strong influence. This book equally would have it’s own, different kind of weird, but rather than a weird based on the most likely progression of a limited data set, it would be the least-likely of a potentially infinite one.

Short Stuff

- Stories from 2050. A bunch of narratives of alternate futures (are all futures alternate if they’re not present?) based on expert interviews and community contribution from a consortium of European researchers.

- Yesterday Once More, a piece from Grafton Tanner about automated nostalgia. His book, Babbling Corpse is one of my favourites. I like to think he borrowed the opening gambit on Joan Serrà’s research from stuff I’ve written a bunch of times about the same thing but I doubt it. There’s a really good breakdown and description of the feedback loop between automated production and consumption of media that I’ve tried to articulate not even half as well many times. ‘You are what you eat’ I guess would explain it: ‘Predictive algorithms don’t really predict anything; they just make certain kinds of pasts repeatedly reappear.’

- I missed this back in September (found it via Sun-Ha Hong’s ‘Same Old‘ which is another perspective on the lack of imaginable futures story worth reading) but it seems ML developers have moved on from Turkers to using refugee labour to tag datasets.

- The Shady Business of Selling Futures and exculpatory piece from Devon Powers on the proliferation of the business of futures and the reinforcement mechanism that drives it. There’s a western technocratic modernist history hear which I’d love to see in a one-shot. I think Scott Smith was working on it once when I spoke to him.

- This tweet about NFTs. Warning: It’s from philosophy twitter.

Alrighty. Welcome to 2022. Feel different, no? I’m not doing a year in review but I am planning a review of predictions for next week which might be great, might be just ok. See you there. Love you as always.